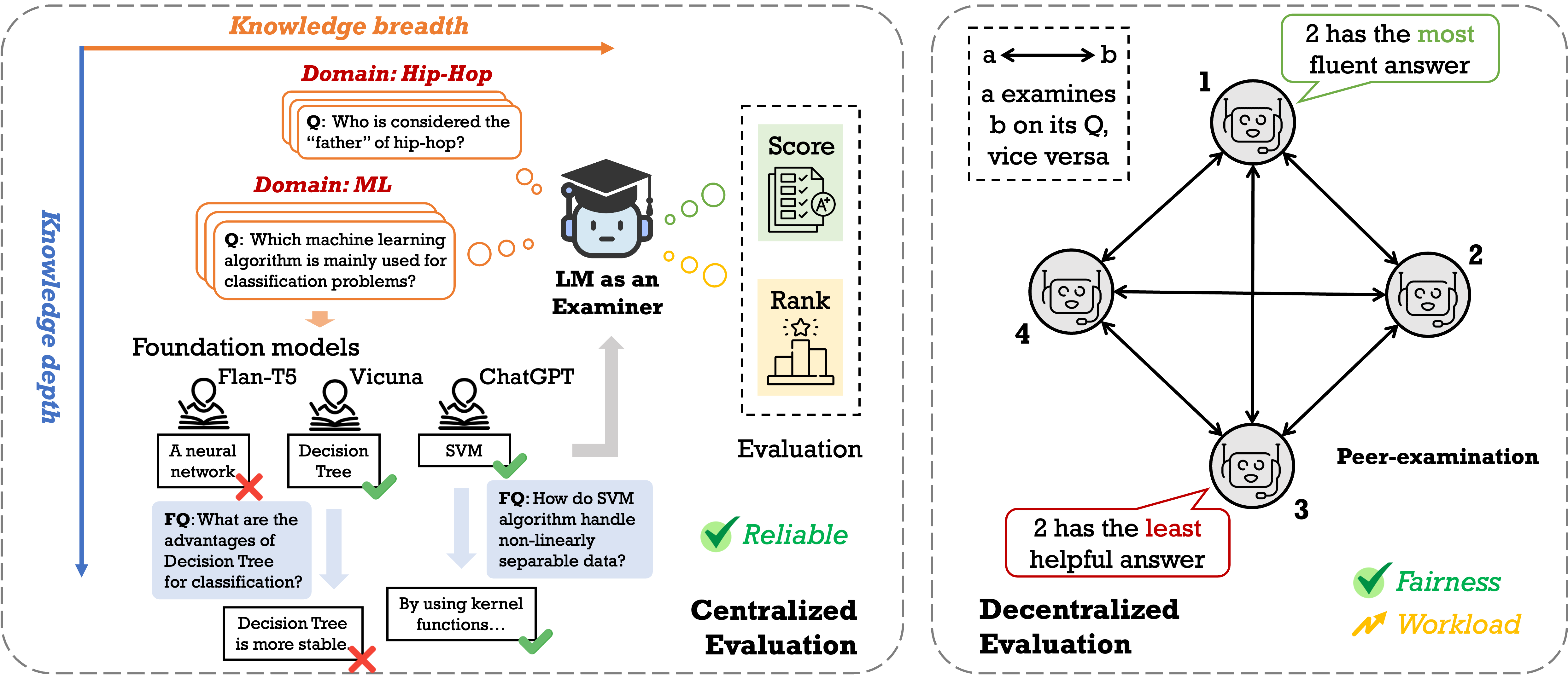

Numerous benchmarks have been established to assess the performance of foundation models on open-ended question answering, which serves as a comprehensive test of a model's ability to understand and generate language in a human-like manner. Most of this work focuses on proposing new datasets; however, we see two main issues in previous benchmarking pipelines: testing leakage and evaluation automation. In this paper, we propose a novel benchmarking framework, Language-Model-as-an-Examiner, in which the LM serves as a knowledgeable examiner that formulates questions based on its own knowledge and evaluates responses in a reference-free manner. The framework is easy to extend: various LMs can be adopted as the examiner, and the questions can be continually updated from more diverse trigger topics. For a more comprehensive and equitable evaluation, we devise three strategies: (1) We instruct the LM examiner to generate questions across a multitude of domains to probe the breadth of a model's knowledge, and to raise follow-up questions for a more in-depth assessment. (2) During evaluation, the examiner combines scoring and ranking measurements, yielding a reliable result that aligns closely with human annotations. (3) We additionally propose a decentralized peer-examination method to address the biases of a single examiner.
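The peer-examination idea in strategy (3) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and not the paper's implementation: the `peer_examination` function, the three callable interfaces, the rule that an examiner does not grade its own answers, and the use of a plain mean to aggregate scores. The toy "models" are simple lambdas so the example runs without any real LM.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical interfaces (not from the paper): each model is described by
# plain callables so the sketch runs without any real language model.
AskFn = Callable[[str], List[str]]      # topic -> list of questions
AnswerFn = Callable[[str], str]         # question -> answer
ScoreFn = Callable[[str, str], float]   # (question, answer) -> score


def peer_examination(
    topics: List[str],
    ask: Dict[str, AskFn],
    answer: Dict[str, AnswerFn],
    score: Dict[str, ScoreFn],
) -> Dict[str, float]:
    """Average the scores each model receives from every other model acting as examiner."""
    received = defaultdict(list)
    for examiner in ask:
        for topic in topics:
            for question in ask[examiner](topic):
                for candidate in answer:
                    if candidate == examiner:
                        continue  # assumption: an examiner does not grade itself
                    reply = answer[candidate](question)
                    received[candidate].append(score[examiner](question, reply))
    # Assumption: a simple mean over all examiners' scores.
    return {name: mean(scores) for name, scores in received.items()}


# Toy stand-ins for three models; the scoring rule is a placeholder
# (word count capped at 5), chosen only to make the example deterministic.
names = ["a", "b", "c"]
ask = {n: (lambda topic: [f"Explain {topic}."]) for n in names}
answer = {
    "a": lambda q: "short",
    "b": lambda q: "a longer reply",
    "c": lambda q: "",
}
score = {n: (lambda q, r: float(min(len(r.split()), 5))) for n in names}

result = peer_examination(["photosynthesis"], ask, answer, score)
print(result)
```

Because every model takes a turn as examiner, no single examiner's preferences determine the final ordering; how the examiners' judgments are actually combined in the framework is not specified in the text above.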

@article{bai2023benchmarking,
  title={Benchmarking Foundation Models with Language-Model-as-an-Examiner},
  author={Yushi Bai and Jiahao Ying and Yixin Cao and Xin Lv and Yuze He and Xiaozhi Wang and Jifan Yu and Kaisheng Zeng and Yijia Xiao and Haozhe Lyu and Jiayin Zhang and Juanzi Li and Lei Hou},
  journal={arXiv preprint arXiv:2306.04181},
  year={2023}
}
